Artificial neural networks (ANNs) are a type of machine learning algorithm loosely inspired by the way the human brain works. Just as the brain is made up of interconnected neurons, ANNs consist of artificial neurons that are connected to each other to form a network. The strengths of these connections (the weights) can be adjusted so that the network learns from data and makes predictions.

Artificial neural networks are the foundation of deep learning, a branch of machine learning that uses neural networks with multiple layers. They have gained a lot of attention in recent years due to their ability to solve complex problems and make accurate predictions in industries such as healthcare, finance, and marketing.

There are different types of artificial neural networks, each with its own structure and applications. In this blog post, we will discuss five types of artificial neural networks and explain how each one works, its advantages and limitations, and its applications.

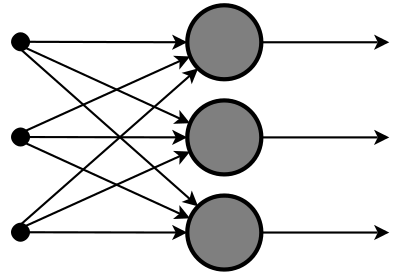

1. Feedforward Neural Networks

Feedforward neural networks are among the most common types of neural network. The fully connected form described here is also known as a multilayer perceptron (MLP). In this network, the neurons are arranged in layers, and each neuron in one layer is connected to every neuron in the next layer. Information flows in one direction only: from the input layer, through the hidden layers, to the output layer, with no loops.

How feedforward neural networks work

In a feedforward neural network, each neuron in the input layer receives one value from the input data. That value is sent to every neuron in the first hidden layer and is multiplied by a separate weight on each connection.

Each neuron in the hidden layer then calculates its output based on the weighted sum of its inputs and applies an activation function to the result. This process is repeated for each subsequent hidden layer until the output layer is reached. The output layer produces the final output of the network.
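Here is a minimal Python sketch of this forward pass, using only the standard library. The layer sizes, the weights, and the choice of ReLU for the hidden layer and sigmoid for the output are arbitrary assumptions for illustration. Each neuron also adds a bias term, a common detail the text above does not mention. The helper names `dense_layer` and `forward` are hypothetical.

```python
import math


def sigmoid(z):
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    """Rectified linear activation: passes positives through, zeroes negatives."""
    return max(0.0, z)


def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer.

    weights[j][i] is the weight on the connection from input i to neuron j.
    Each neuron takes the weighted sum of all inputs, adds its bias, and
    applies the activation function.
    """
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


def forward(x, layers):
    """Feed x through each (weights, biases, activation) layer in turn."""
    for weights, biases, activation in layers:
        x = dense_layer(x, weights, biases, activation)
    return x


# Hypothetical 2-3-1 network with hand-picked (untrained) weights.
layers = [
    ([[0.5, -0.2], [0.3, 0.8], [-0.7, 0.1]], [0.0, 0.1, 0.2], relu),  # hidden layer
    ([[1.0, -1.0, 0.5]], [0.0], sigmoid),                              # output layer
]
print(forward([1.0, 2.0], layers))
```

The output of each layer becomes the input of the next, which is the one-directional flow described above.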

Training uses the backpropagation algorithm. After a forward pass, it measures the error between the predicted output and the actual output. It then works backward through the network to calculate how much each connection's weight contributed to that error. An optimizer such as gradient descent then adjusts the weights to reduce the error.
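Below is a small sketch of that training loop on the classic XOR problem. It assumes mean squared error as the loss and plain full-batch gradient descent as the optimizer. Real projects usually rely on a framework's automatic differentiation rather than hand-written gradients. The function name `train_xor` and every hyperparameter are illustrative choices.

```python
import math
import random


def sigmoid(z):
    """Logistic activation, squashing any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_xor(hidden=4, lr=0.5, epochs=5000, seed=0):
    """Train a 2-input, `hidden`-unit, 1-output network on XOR.

    Backpropagation computes the gradients of the mean squared error, and
    full-batch gradient descent updates the weights.
    Returns (loss_before, loss_after, predict).
    """
    rng = random.Random(seed)
    data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
    # w1[j][i] connects input i to hidden neuron j; w2[j] connects hidden j to the output.
    w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0

    def predict(x):
        """Forward pass; returns (hidden activations, network output)."""
        h = [sigmoid(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j]) for j in range(hidden)]
        y = sigmoid(sum(w2[j] * h[j] for j in range(hidden)) + b2)
        return h, y

    def mse():
        return sum((predict(x)[1] - t) ** 2 for x, t in data) / len(data)

    loss_before = mse()
    for _ in range(epochs):
        g_w1 = [[0.0, 0.0] for _ in range(hidden)]
        g_b1 = [0.0] * hidden
        g_w2 = [0.0] * hidden
        g_b2 = 0.0
        for x, t in data:
            h, y = predict(x)
            # Error signal at the output: dLoss/dz for MSE through a sigmoid.
            d_out = 2.0 * (y - t) * y * (1.0 - y) / len(data)
            g_b2 += d_out
            for j in range(hidden):
                g_w2[j] += d_out * h[j]
                # Chain rule: push the output error back to hidden neuron j.
                d_h = d_out * w2[j] * h[j] * (1.0 - h[j])
                g_b1[j] += d_h
                g_w1[j][0] += d_h * x[0]
                g_w1[j][1] += d_h * x[1]
        # Gradient descent: step every parameter against its gradient.
        for j in range(hidden):
            w2[j] -= lr * g_w2[j]
            b1[j] -= lr * g_b1[j]
            w1[j][0] -= lr * g_w1[j][0]
            w1[j][1] -= lr * g_w1[j][1]
        b2 -= lr * g_b2

    return loss_before, mse(), lambda x: predict(x)[1]


before, after, net = train_xor()
print(f"loss before: {before:.4f}, after: {after:.4f}")
print([round(net(x), 2) for x in ([0, 0], [0, 1], [1, 0], [1, 1])])
```

Whether the network ends up solving XOR exactly depends on the random seed, the learning rate, and the number of epochs. What the sketch does show is the cycle of forward pass, backpropagated error, and weight update driving the loss down.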

Applications of feedforward neural networks

Feedforward neural networks are widely used in applications such as speech recognition, image classification, fraud detection, and sentiment analysis. They are particularly useful when there is a large amount of input data and complex patterns need to be learned. Some examples include:

- Image recognition: Feedforward neural networks have been used to classify images in tasks such as identifying objects in images or recognizing faces.

- Speech recognition: Feedforward neural networks have been used to convert speech to text in tasks such as voice assistants or transcribing phone conversations.

- Natural language processing: Feedforward neural networks have been used to analyze and generate text in tasks such as language translation or sentiment analysis.

Feedforward neural networks are easy to implement and can handle large amounts of data. They are also good at learning patterns in the data and making accurate predictions. However, they have some limitations. They have no memory of previous inputs, which makes them poorly suited to sequential data, and they tend to overfit the training data.

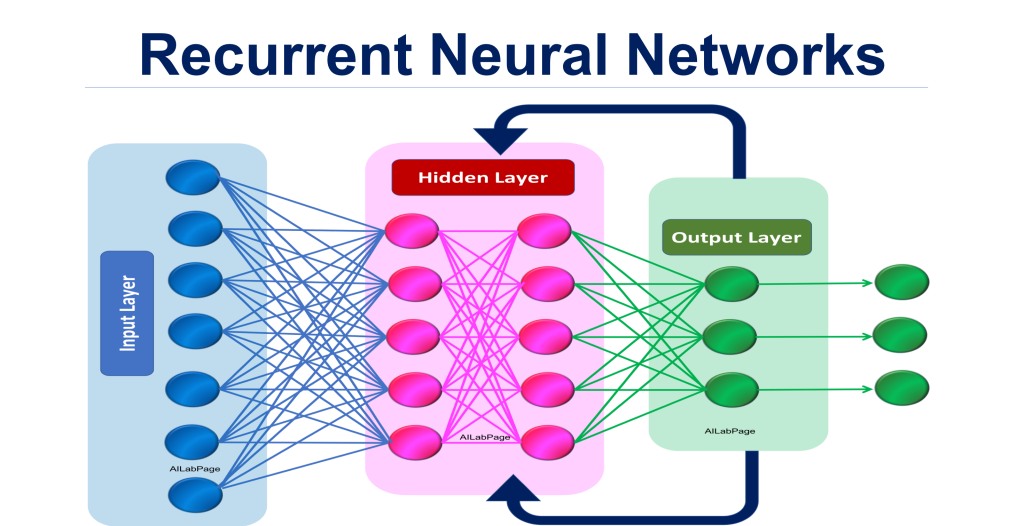

2. Recurrent Neural Networks

Recurrent neural networks are a type of neural network that can process sequential data. They have loops in their architecture that allow them to maintain an internal state or memory. This memory enables the network to process sequences of input data and make predictions based on the previous inputs.

How recurrent neural networks work

A recurrent neural network works on the principle that the output of a layer is saved and fed back as an input at the next step, where it helps the layer make its next prediction.

The first layer is computed much like in a feedforward neural network, as a weighted sum of the input features. After that, the recurrent process begins: from one time step to the next, each neuron retains some of the information it had at the previous time step. This makes each neuron act like a memory cell.

During training, the network runs forward propagation across the sequence and keeps the intermediate states it will need later. If a prediction is wrong, the error is propagated backward through the time steps, a process known as backpropagation through time. The weights are then adjusted in small steps, scaled by the learning rate, so that the predictions gradually improve.
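The recurrence can be sketched with a single recurrent neuron. The sketch assumes the common tanh update h_t = tanh(w_x * x_t + w_h * h_(t-1) + b). Real RNNs use vectors and weight matrices rather than the scalars used here for readability, and the weights below are hypothetical.

```python
import math


def rnn_forward(inputs, w_x, w_h, b, h0=0.0):
    """Run a single-unit (scalar) Elman-style RNN over a sequence.

    At each time step the new hidden state mixes the current input with the
    previous hidden state: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b).
    Returns the list of hidden states, one per time step.
    """
    h = h0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)  # previous state is fed back in
        states.append(h)
    return states


# Hypothetical weights; the same three numbers are reused at every time step.
w_x, w_h, b = 1.0, 0.5, 0.0
print(rnn_forward([1.0, 0.0, 0.0], w_x, w_h, b))
print(rnn_forward([0.0, 0.0, 1.0], w_x, w_h, b))
```

The two sequences contain the same values in a different order, yet they end in different final states. This is because the input seen at the first step still echoes in the hidden state two steps later.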

Applications of recurrent neural networks

Recurrent neural networks have been used in a wide range of applications. Some examples include:

- Speech recognition: Recurrent neural networks have been used to convert speech to text in tasks such as voice assistants or transcribing phone conversations.

- Natural language processing: Recurrent neural networks have been used to analyze and generate text in tasks such as language translation or chatbots.

- Time series prediction: Recurrent neural networks have been used to make predictions based on time series data, such as stock prices or weather forecasts.

Recurrent neural networks are good at processing sequential data and can maintain an internal state or memory. They are also capable of making predictions based on previous inputs. However, they have some limitations, such as their tendency to suffer from the vanishing gradient problem and their difficulty in learning long-term dependencies.
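The vanishing gradient problem follows from the chain rule. This sketch assumes a scalar tanh RNN with no bias, a hypothetical recurrent weight of 0.9, and one nonzero input followed by zeros. Each step back in time multiplies the gradient by w_h * (1 - h_t^2), a factor smaller than 1, so the gradient reaching early time steps shrinks roughly exponentially with sequence length.

```python
import math


def gradient_through_time(steps, w_h=0.9, w_x=1.0, first_input=1.0):
    """Return d h_(steps+1) / d h_1 for a scalar tanh RNN fed one input, then zeros.

    Each step multiplies the gradient by w_h * (1 - h_t**2), the derivative
    of h_t = tanh(w_x * x_t + w_h * h_(t-1)) with respect to h_(t-1).
    """
    h = math.tanh(w_x * first_input)  # h_1
    grad = 1.0
    for _ in range(steps):
        h = math.tanh(w_h * h)        # x_t = 0 after the first step
        grad *= w_h * (1.0 - h * h)   # local derivative dh_t / dh_(t-1)
    return grad


for steps in (1, 5, 20, 50):
    print(steps, gradient_through_time(steps))
```

Every factor here is below 0.9, so the gradient after 50 steps is smaller than 0.9^50, which is about 0.005. That is why plain RNNs find long-term dependencies hard to learn.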

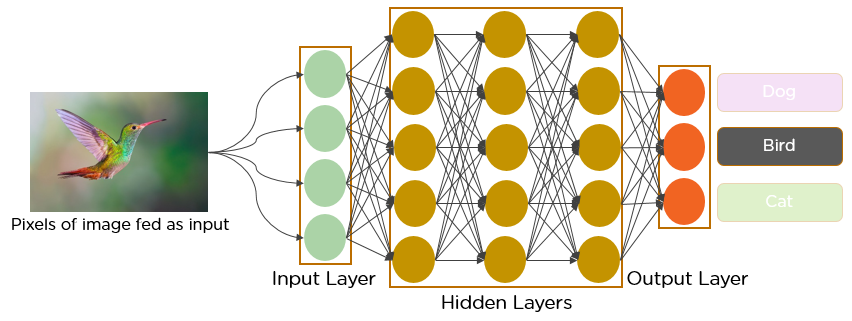

3. Convolutional Neural Networks

Convolutional neural networks (CNNs) are a type of neural network designed primarily for processing grid-like data such as images and video. They use convolutional layers, pooling layers, and fully connected layers to extract features from the input data and make predictions.

How convolutional neural networks work

In a convolutional neural network, the input data is typically an image or a video. The network consists of multiple layers, including convolutional layers, pooling layers, and fully connected layers.

Convolutional layers are responsible for extracting features from the input data. They do this by applying a set of filters. Each filter is a small matrix of weights that is slid across the input. At each position, the filter computes a weighted sum of the small patch of input it covers. Each filter produces one feature map, which records where in the input that filter's feature (for example, an edge with a particular orientation) appears.
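The sliding-filter operation can be sketched in plain Python. The sketch assumes a single-channel image, stride 1, and no padding. Like most deep-learning libraries, it computes cross-correlation, meaning the filter is not flipped. In a trained CNN the filter weights are learned; the edge-detecting filter below is hand-set for illustration.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution as used in CNN layers (no padding, stride 1).

    The kernel is slid over the image without being flipped, and each
    output value is the weighted sum of the patch under the kernel.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0.0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        feature_map.append(row)
    return feature_map


# A tiny 4x4 "image" with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical hand-set 2x2 filter that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 1],
    [-1, 1],
]
print(conv2d(image, kernel))  # → [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The feature map is large only in the middle column, exactly where the edge sits.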

Pooling layers reduce the size of the feature maps, which lowers the amount of computation in later layers and can help reduce overfitting. They do this by down-sampling each feature map, for example by keeping only the largest value in each small window (max pooling). This makes the network more robust to small shifts and variations in the input data.
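Max pooling is one common kind of pooling; average pooling is another. It can be sketched as follows, assuming non-overlapping 2×2 windows. The function name `max_pool2d` is a hypothetical helper.

```python
def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling (window = stride = size).

    Each output value is the largest value in one size x size window, so the
    feature map shrinks by a factor of `size` in each dimension. Rows or
    columns that do not fill a complete window are dropped.
    """
    pooled = []
    for r in range(0, len(feature_map) - size + 1, size):
        row = []
        for c in range(0, len(feature_map[0]) - size + 1, size):
            window = [feature_map[r + i][c + j] for i in range(size) for j in range(size)]
            row.append(max(window))
        pooled.append(row)
    return pooled


fmap = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 1, 5, 6],
    [2, 2, 7, 3],
]
print(max_pool2d(fmap))  # → [[4, 2], [2, 7]]
```

The 4×4 map shrinks to 2×2. Moving a large value by one position within its window would not change the output, and this is where the robustness to small shifts comes from.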

Fully connected layers are used to make predictions based on the features extracted from the input data. They take the output of the convolutional and pooling layers and produce the final output of the network.

Applications of convolutional neural networks

Convolutional neural networks have been used in a wide range of applications. Some examples include:

- Image classification: Convolutional neural networks have been used to classify images into different categories, such as identifying objects in images or recognizing faces.

- Object detection: Convolutional neural networks have been used to detect objects in images or videos and locate them in the image.

- Image segmentation: Convolutional neural networks have been used to segment images into different regions based on their content.

Convolutional neural networks are designed for image and video data and are good at learning spatial patterns in it. They can also handle large amounts of data and make accurate predictions. However, they have some limitations. Architectures that end in fully connected layers expect inputs of a fixed size, and, like other deep networks, CNNs tend to overfit when training data is limited.

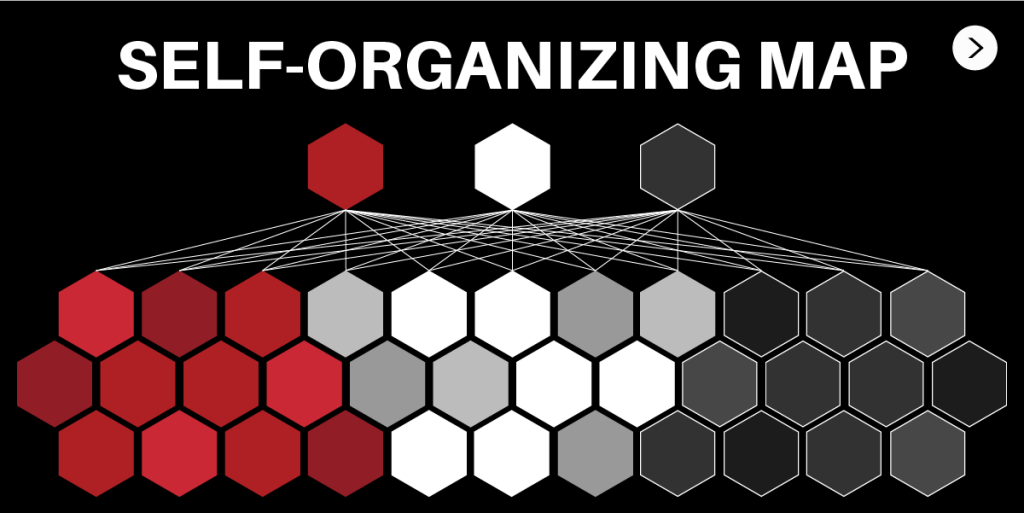

4. Kohonen Self-Organizing Maps

Kohonen self-organizing maps are a type of neural network that is used for unsupervised learning. They are used to identify patterns in data and group similar data points together.

How self-organizing maps work

In a self-organizing map, the network consists of an input layer and a map layer. The input layer receives the input data, and the map layer consists of a set of neurons arranged in a two-dimensional grid.

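Before training, each neuron in the map layer is assigned a weight vector, often initialized randomly. During training, each input is presented to the network, and the neuron whose weight vector is closest to it is found; this neuron is called the best matching unit (BMU). The weight vectors of the BMU and of its neighbors on the grid are then moved toward the input. Both the learning rate and the size of the neighborhood shrink as training goes on. As a result, neurons that are close to each other in the map layer end up with similar weight vectors, so similar inputs are grouped together in nearby parts of the map.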

After the training process is complete, each neuron in the map layer represents a group of similar input data points. New input data can be presented to the network, and the neuron with the closest weight vector to the input data represents the group to which the input data belongs.
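The training loop described above can be sketched as follows. It is a minimal illustration rather than a reference implementation. The 3×3 grid, random initialization, Gaussian neighborhood, linearly decaying learning rate and radius, and the toy two-cluster data are all assumptions chosen for readability.

```python
import math
import random


def best_matching_unit(weights, x):
    """Grid position of the neuron whose weight vector is closest to x."""
    return min(
        weights,
        key=lambda pos: sum((wk - xk) ** 2 for wk, xk in zip(weights[pos], x)),
    )


def train_som(data, rows=3, cols=3, epochs=200, lr0=0.5, radius0=1.5, seed=0):
    """Train a rows x cols self-organizing map on a list of input vectors.

    Returns a dict mapping each grid position (row, col) to its weight vector.
    """
    rng = random.Random(seed)
    dim = len(data[0])
    # Each neuron has a fixed grid position and a randomly initialized weight vector.
    weights = {
        (r, c): [rng.random() for _ in range(dim)]
        for r in range(rows)
        for c in range(cols)
    }
    for epoch in range(epochs):
        # Learning rate and neighborhood radius both shrink over time.
        frac = epoch / epochs
        lr = lr0 * (1.0 - frac)
        radius = max(radius0 * (1.0 - frac), 0.5)
        for x in rng.sample(data, len(data)):  # visit inputs in random order
            bmu = best_matching_unit(weights, x)
            for pos, w in weights.items():
                # Gaussian neighborhood measured by distance on the grid,
                # not in input space: the BMU moves most, its neighbors less.
                grid_d2 = (pos[0] - bmu[0]) ** 2 + (pos[1] - bmu[1]) ** 2
                influence = math.exp(-grid_d2 / (2.0 * radius ** 2))
                for k in range(dim):
                    w[k] += lr * influence * (x[k] - w[k])
    return weights


# Hypothetical toy data: two well-separated clusters of 2-D points.
cluster_a = [[0.10, 0.10], [0.15, 0.05], [0.05, 0.20]]
cluster_b = [[0.90, 0.85], [0.95, 0.90], [0.85, 0.95]]
som = train_som(cluster_a + cluster_b)
print(best_matching_unit(som, [0.10, 0.12]), best_matching_unit(som, [0.90, 0.90]))
```

After training, a new point is assigned to a group simply by calling `best_matching_unit`. Points from the two clusters should land on different neurons of the grid.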

Applications of self-organizing maps

Self-organizing maps have been used in a wide range of applications. Some examples include:

- Data visualization: Self-organizing maps can be used to visualize high-dimensional data in a two-dimensional map. This can help identify patterns and relationships in the data.

- Clustering: Self-organizing maps can be used to cluster similar data points together. This can be used for tasks such as customer segmentation or identifying similar products.

- Anomaly detection: Self-organizing maps can be used to identify data points that are significantly different from the rest of the data. This can be used for tasks such as fraud detection or identifying faulty products.

Self-organizing maps are good at identifying patterns in data and grouping similar data points together. They can also visualize high-dimensional data on a two-dimensional map. However, they have some limitations. Their results can depend heavily on the initial weights and on choices such as the grid size, learning rate, and neighborhood radius, and training can become slow on very large datasets.

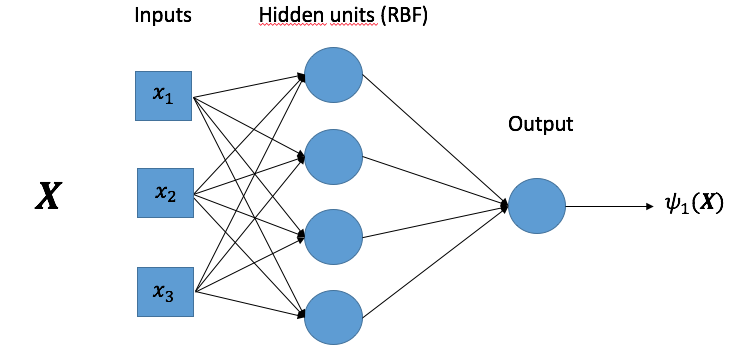

5. Radial Basis Function Networks

Radial basis function (RBF) networks are a type of artificial neural network commonly used for function approximation and classification problems. They are based on radial basis functions, whose value depends only on the distance of the input from a given center point.

How radial basis function networks work

In an RBF network, the input data is first passed to a set of hidden neurons. Each hidden neuron corresponds to one radial basis function with its own center, and its output depends on the distance between the input and that center. For the commonly used Gaussian function, the output is largest when the input sits exactly at the center and falls off as the input moves away.

After the hidden layer processes the input data, the output layer combines the outputs of the hidden neurons to produce the final output of the network.
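Written as a formula, and assuming the common Gaussian choice of radial basis function (other shapes exist), an RBF network with m hidden neurons computes the following. The symbols are introduced here for illustration.

```latex
y(\mathbf{x}) = \sum_{j=1}^{m} w_j \, \varphi\big(\lVert \mathbf{x} - \mathbf{c}_j \rVert\big),
\qquad
\varphi(r) = \exp\!\left(-\frac{r^2}{2\sigma^2}\right)
```

Here c_j is the center of hidden neuron j, σ is the width of the basis functions, and w_j is the weight on the connection from hidden neuron j to the output. An output bias term is sometimes added.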

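Training usually happens in two stages. First, the centers (and widths) of the hidden neurons are chosen, for example by clustering the training data or by picking a subset of training points. Then, with the hidden layer held fixed, the weights of the connections between the hidden layer and the output layer are fitted, often by linear least squares. Unlike in a feedforward network, the connections from the input layer to the hidden layer carry no trainable weights; the hidden neurons simply measure distances to their centers.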
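Here is a minimal one-dimensional sketch of this two-stage training, fitting samples of sin(x). It makes several assumptions. The basis functions are Gaussian with a hand-picked width. There is one center per training point, which turns the output-weight problem into a square linear system (RBF interpolation); with fewer centers than training points, you would solve a least-squares problem instead. `RBFNetwork` and `solve` are hypothetical helpers, not a library API.

```python
import math


def gaussian(r, width):
    """Gaussian radial basis function of the distance r from a center."""
    return math.exp(-(r ** 2) / (2.0 * width ** 2))


def solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix (copies a)
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


class RBFNetwork:
    """1-D RBF network: fixed Gaussian hidden neurons, trained linear output layer."""

    def __init__(self, centers, width):
        # Stage 1: centers (and a shared width) are chosen up front and then fixed.
        self.centers = list(centers)
        self.width = width
        self.weights = None

    def hidden(self, x):
        # Each hidden output depends only on the distance between x and its center.
        return [gaussian(abs(x - c), self.width) for c in self.centers]

    def fit(self, xs, ys):
        # Stage 2: with the hidden layer fixed, the output weights solve a linear
        # system. This sketch assumes one center per training point, so the
        # system is square and is solved exactly (RBF interpolation).
        phi = [self.hidden(x) for x in xs]
        self.weights = solve(phi, ys)
        return self

    def predict(self, x):
        # Output layer: weighted sum of the hidden-neuron outputs.
        return sum(w * h for w, h in zip(self.weights, self.hidden(x)))


xs = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
ys = [math.sin(x) for x in xs]
net = RBFNetwork(centers=xs, width=0.5).fit(xs, ys)
print(net.predict(1.25), math.sin(1.25))  # interpolated vs. true value
```

Because only the output layer is trained and that step is a linear problem, fitting needs no iterative gradient descent once the centers are fixed.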

Applications of radial basis function networks

RBF networks have been used in a wide range of applications. Some examples include:

- Function approximation: RBF networks can be used to approximate complex functions, for example in models for weather forecasting or financial modeling.

- Classification: RBF networks can be used to sort data into categories, for tasks such as identifying spam emails or detecting cancer cells.

- Time series prediction: RBF networks can be used to predict future values in time series data, such as stock prices or energy demand.

RBF networks are good at approximating complex functions and can cope with noisy input data. With a suitable number of hidden neurons, they can also be less prone to overfitting than some other types of neural networks.

Yet they have limitations. When the centers are tuned by gradient descent, training can get stuck in local minima. They also struggle with high-dimensional data, where the number of hidden neurons needed can grow quickly. As a result, they can require more computational resources than some other types of neural networks, making them potentially more expensive.

Conclusion

There are many different types of artificial neural networks, each with its own strengths and limitations. Understanding how they work and where they are applied helps in choosing the right network for your specific task. As artificial neural networks continue to evolve, they are likely to become even more powerful.

Before You Go…

Hey, thank you for reading this blog to the end. I hope it was helpful. Let me tell you a little bit about Nicholas Idoko Technologies. We help businesses and companies build an online presence by developing web, mobile, desktop, and blockchain applications.

We also help aspiring software developers and programmers learn the skills they need to have a successful career. Take your first step to becoming a programming boss by joining our Learn To Code academy today!

Be sure to contact us if you need more information or have any questions! We are readily available.